Everyone is containerizing these days. Some do it better, some worse … and some just claim they do 😉 Here at NubiSoft we try to do our best, and not only because we want our customers to be satisfied with systems that are cheap to maintain. We also do it for ourselves: it simply gives us satisfaction. In this article, we consider using the ZFS file system together with Docker container technology.

A couple of days ago the new LTS version of our beloved OS was released, namely Ubuntu 20.04 LTS (Focal Fossa). It is our favorite platform for servers and one of our favorites for developer workstations. This version introduces many interesting changes, including:

- new lock screen,

- …?! @#$%^&*

Really? Can somebody tell me why this month everybody is writing posts about things that can be found in the Ubuntu release notes? Does anybody read them?

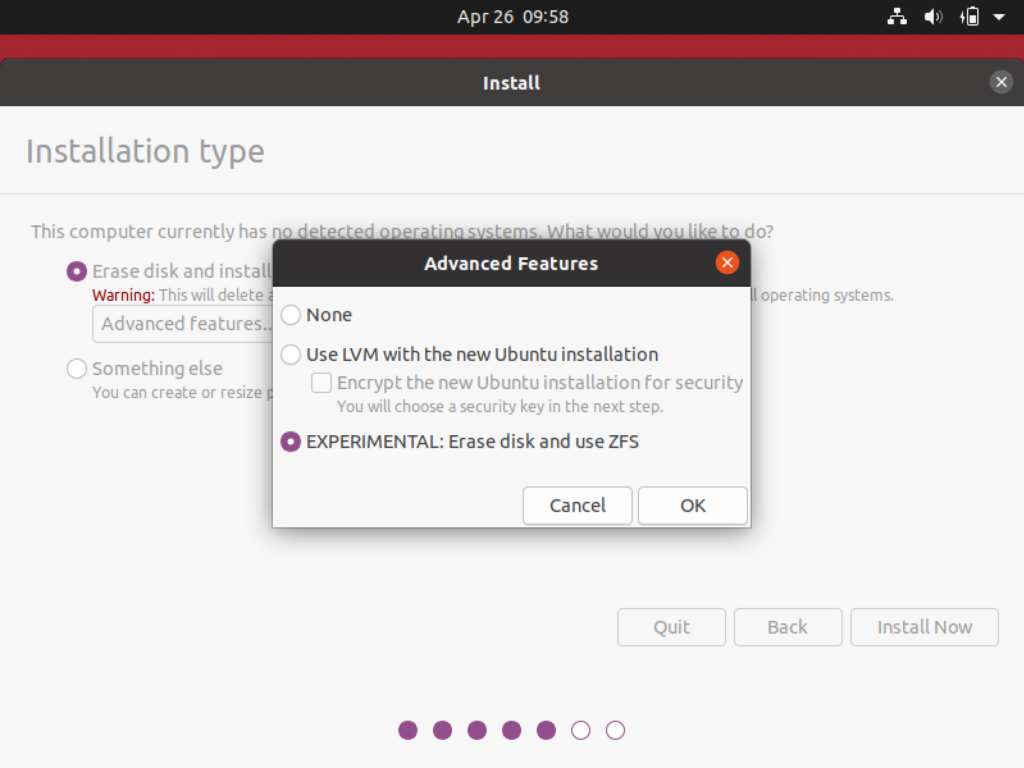

For me, this release became an inspiration to look again at the possibility of using ZFS for containerization. I was hoping that after version 19.10, where ZFS was introduced only in experimental form, the time had now come for a production version. Unfortunately, this did not happen.

As it happens, the adoption of this file system into the open world of Linux has been very painful. There are two reasons for this. The first is legal: ZFS was developed by Sun, which was bought by Oracle, and it was Oracle that decided to close the sources. With that decision Oracle lost many great employees, who left and continued to develop the ZFS sources themselves. But even the earlier ZFS license (the CDDL) is not compatible with the GPL under which the Linux kernel is released, and changing it would require the consent of all previous ZFS developers, which in practice is not feasible. That is why Linus Torvalds will probably never agree to include ZFS support in the kernel; his broader justification can be found here. And I understand his point perfectly! Of course, the makers of individual Linux distributions cope with this gap perfectly well by allowing ZFS to be selected as an additional component at installation time. This is also what Canonical does with Ubuntu.

The second reason for the difficult adoption of ZFS in the Linux world is that ZFS changes everything! It is not only a file system but also an integrated volume manager that lets one file system span multiple block devices, so it offers what LVM offers, and even something more. I can’t resist mentioning its most important features:

- Copy-on-write,

- Snapshots,

- Pooled storage,

- Data integrity verification and automatic repair,

- RAID-Z (an improvement that eliminates the RAID-5 write hole),

- Compression,

- Deduplication,

- Maximum 16 Exabyte file size,

- Maximum 256 Quadrillion Zettabytes storage.
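The last two figures look less arbitrary once they are written as powers of two. The short Python check below assumes (this is my reading, it is not stated above) that the limits correspond to 64-bit file offsets and a 128-bit pool address space, and that "quadrillion" is used in its binary sense of 2^50:

```python
# Assumption: the limits are 2**64 bytes per file and 2**128 bytes per pool.
EXBI = 2**60   # bytes in one exbibyte
ZEBI = 2**70   # bytes in one zebibyte

max_file_bytes = 2**64
max_pool_bytes = 2**128

# File size limit expressed in exbibytes.
max_file_eib = max_file_bytes // EXBI

# Pool size limit expressed in zebibytes.
max_pool_zib = max_pool_bytes // ZEBI

print(max_file_eib)                   # 16
print(max_pool_zib == 256 * 2**50)    # True
print(f"{max_pool_zib:,}")            # 288,230,376,151,711,744
```

In decimal terms the pool limit is therefore closer to 288 quadrillion zebibytes; the round "256" only appears when the quadrillion is binary.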

I first turned my attention to ZFS many years ago when, as a consultant for an IT corporation, I was asked to implement a shared file system for all employees. It was to run on a large DELL server that stood in their server room. Because this resource was supposed to store numerous ISO images and virtual machine images, I began to look for solutions that would allow deduplication. I decided to use FreeNAS, which offered deduplication with the help of ZFS, albeit implemented on BSD (by the way, many people choose BSD over Linux only because of its excellent ZFS implementation). It turned out that FreeNAS let me meet all my requirements. Well, all … except for deduplication 😉 Running this feature effectively required huge amounts of memory, which the server I had been given did not have. Still, we stayed with the FreeNAS platform, because at that time we did not find a better software implementation of deduplication.

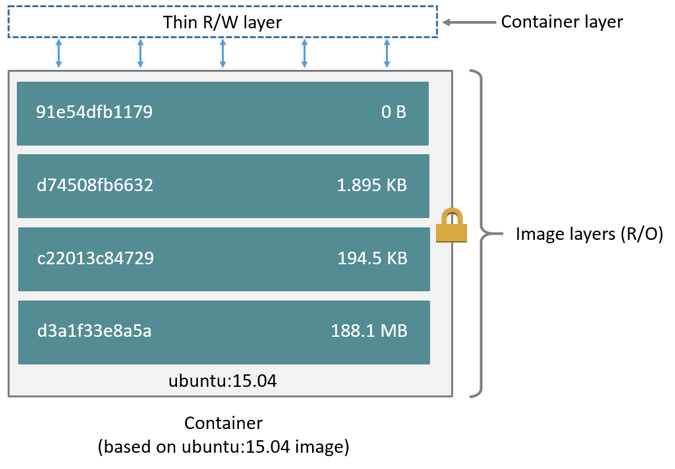

Years have passed, technologies have evolved, Red Hat bought Ansible, IBM bought Red Hat 😉 And today we have containerization, in which Docker leads the way. For the purposes of this discussion, it is worth recalling that Docker images are composed of layers, i.e. each subsequent command in a Dockerfile adds another layer to the image, and launching a container adds one more layer that holds its current state. You can read about it in detail in the documentation itself; below I only present a picture borrowed from that documentation 🙂

Among other things, this has the advantage that when many identical containers are launched, their image layers are shared and only a separate state layer is created for each of them. This is a big saving for the operating system. The standard implementation of this mechanism creates a directory for each layer, with a state directory on top. A container process that wants a specific file must search the layers in order, from the highest to the lowest, and stops at the first layer in which the file is found. If the file has to be modified, it must first be copied to the state layer.
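The lookup and copy-on-modify behavior described above can be sketched in a few lines of Python. This is only a toy model with made-up names (`LayeredFS`, `append`); real drivers work on directories and kernel mounts, not on dictionaries:

```python
from typing import Dict, List, Optional

Layer = Dict[str, str]  # path -> file content


class LayeredFS:
    """Toy model of a directory-per-layer union file system (hypothetical)."""

    def __init__(self, image_layers: List[Layer]) -> None:
        # Image layers are shared between containers (lowest layer first)
        # and are never modified by this class.
        self.image_layers = image_layers
        # Every container gets its own writable state layer on top.
        self.state: Layer = {}

    def read(self, path: str) -> Optional[str]:
        # Search from the highest layer down and stop at the first hit.
        for layer in [self.state, *reversed(self.image_layers)]:
            if path in layer:
                return layer[path]
        return None

    def append(self, path: str, data: str) -> None:
        # Copy-up: take the current content from whichever layer holds it,
        # then store the modified file in the state layer only.
        current = self.read(path)
        self.state[path] = (current or "") + data


# Two "identical" containers started from the same image layers.
image = [{"/etc/app.conf": "debug=0\n"}, {"/app/main.py": "print('hi')\n"}]
a, b = LayeredFS(image), LayeredFS(image)

a.append("/etc/app.conf", "verbose=1\n")
print(repr(a.read("/etc/app.conf")))  # 'debug=0\nverbose=1\n'
print(repr(b.read("/etc/app.conf")))  # 'debug=0\n'
```

Note that every read may have to walk through all layers, and that even a one-line change copies the whole file into the state layer first.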

This implementation does not seem very efficient, which is why I have long been looking at the possibility of using a storage driver that relies on the native snapshot capability of some file systems, including ZFS. And indeed, the Docker ecosystem provides such a driver for the ZFS file system.
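To illustrate why snapshots are attractive here, below is a rough Python sketch of the idea, assuming a file system that can take a read-only snapshot and create writable clones from it. The `Dataset` class and its methods are invented for illustration and say nothing about how ZFS or the Docker driver are actually implemented:

```python
from types import MappingProxyType
from typing import Dict, Mapping


class Dataset:
    """Toy copy-on-write dataset: a table of pointers to file contents."""

    def __init__(self, files: Mapping[str, str]) -> None:
        # Only the pointer table is copied; the contents themselves are shared.
        self._files: Dict[str, str] = dict(files)

    def snapshot(self) -> Mapping[str, str]:
        # A read-only, point-in-time view of the pointer table.
        return MappingProxyType(dict(self._files))

    @classmethod
    def clone(cls, snap: Mapping[str, str]) -> "Dataset":
        # A writable dataset that starts out identical to the snapshot.
        return cls(snap)

    def read(self, path: str) -> str:
        # A single lookup, with no walk through a stack of layers.
        return self._files[path]

    def write(self, path: str, content: str) -> None:
        # Only this dataset's pointer changes; snapshot and siblings are untouched.
        self._files[path] = content


base = Dataset({"/etc/app.conf": "debug=0\n", "/app/main.py": "print('hi')\n"})
snap = base.snapshot()

# Two containers, each a clone of the same snapshot.
c1, c2 = Dataset.clone(snap), Dataset.clone(snap)
c1.write("/etc/app.conf", "debug=1\n")

print(repr(c1.read("/etc/app.conf")))   # 'debug=1\n'
print(repr(c2.read("/etc/app.conf")))   # 'debug=0\n'
print(c1.read("/app/main.py") is c2.read("/app/main.py"))  # True
```

Compared with the directory-per-layer approach, each container sees one flat view, and unmodified data stays shared without any search from the top layer downwards.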

And now we come to the key questions – why would we use ZFS for Docker and do Docker developers recommend it themselves? For now, the facts are as follows.

- So far, there is no production support for ZFS on Linux platforms, and you probably would not want to run your servers on experimental versions of their components, would you?

- In fact, containers usually do not write their state to the file system intensively. Most often, even log files and configuration files are mapped to external volumes, not to mention that the “business-important state” is kept in databases.

- When implementing cloud solutions, you need to know that the block devices attached to virtual hosts (EC2, VPS, Droplet or whatever we call them) are often not physical block devices, but services that already provide increased availability, etc.

- Serious cloud service providers allow you to increase disk space virtually on the fly, so there is no need for volume managers such as LVM or ZFS.

- In fact, using ZFS with Docker carries the risk of performance loss, as described by the creators of Docker.

- And last but not least, the ZFS storage driver is not among the drivers recommended for Ubuntu with Docker Community Edition. See the documentation for more details.

- Putting it all together, I would conclude that ZFS on servers that run Docker and are deployed to the cloud is something you neither need nor want.

It is a bit sad that ZFS, although it has so many interesting capabilities, finds it so difficult to break into the open source mainstream. And it is a little funny that, both many years ago and now, I looked at it in order to improve the mechanisms of modularization (then virtualization, now containerization), but each time a different ZFS feature was supposed to help me (then deduplication, now snapshots).

OK, so it’s time to answer the title question: why is Canonical making the effort to bring ZFS into Ubuntu?

Above we wrote about server deployments using containerization technology. Although it is currently mainstream, many are still deploying systems in the traditional way, on their own servers or on servers in traditional colocation services.

And finally, the most important point! Ubuntu is also a great desktop platform! And here ZFS features can bring real benefits. Windows, for example, has offered snapshots for a long time and uses similar mechanisms, e.g. for transactional upgrades. Ubuntu with ZFS is going the same way. Basic usage of the ZFS features on Ubuntu is described here, while the fairly new concept of transactional upgrades can be found here.

And you? Do you have any experience using ZFS? Was it good or not so good?

Other points of view

- Of course, if you use Ubuntu Desktop as a virtual machine for development purposes, you still do not need to use ZFS for transactional snapshots. You can do the same from the hypervisor level.

- There is also a less complex but slightly more mature file system in the Linux world: Btrfs. I tried to use it, but it did not win me over, because a few times it lost its consistency for no apparent reason.

- If not ZFS, then what? Nowhere in the post did we mention what we use in our infrastructure solutions. We use the “old-fashioned” (meaning mature) and proven ext4, and we recommend it to you as well.

- We are more than happy with one new feature of the latest Ubuntu release: native support for the Nextcloud platform. We love it, and we use it extensively. Here you can read how to deploy it to the cloud on your own.